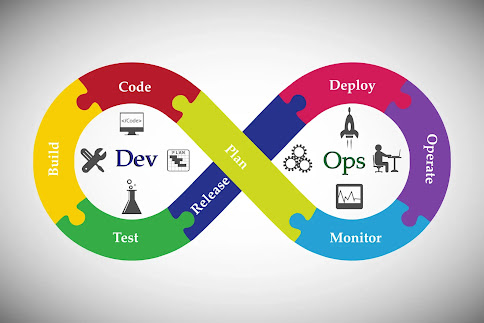

How is our code (Spark code, Hive query, or any other code) deployed to production?

In general, code passes through four environments before it is production ready:

- Our Local Laptop - Integrated Development Environment (IDE)

- Development Cluster

- Testing or User Acceptance Testing (UAT) Cluster

- Production Cluster

For example:

First, a developer writes the Spark code on a laptop in an IDE (Eclipse or IntelliJ) and unit tests it locally. The code is then moved to the development cluster, where it is submitted together with all the dependencies it needs and run.
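As a rough illustration of "submitting the code with all its dependencies", the sketch below assembles a `spark-submit` command line in Python. The JAR names, the main class `com.example.etl.DailyLoad`, and the helper `build_spark_submit` are hypothetical and not taken from any real project; only the `--master`, `--deploy-mode`, `--class`, and `--jars` flags are standard `spark-submit` options. Treat it as a minimal sketch under those assumptions.

```python
import shlex
from typing import List, Optional


def build_spark_submit(
    app_jar: str,
    main_class: str,
    master: str = "yarn",
    deploy_mode: str = "cluster",
    extra_jars: Optional[List[str]] = None,
    app_args: Optional[List[str]] = None,
) -> List[str]:
    """Return a spark-submit command as a list of arguments (hypothetical helper)."""
    cmd = [
        "spark-submit",
        "--master", master,
        "--deploy-mode", deploy_mode,
        "--class", main_class,
    ]
    if extra_jars:
        # --jars expects a single comma-separated list of dependency JARs
        cmd += ["--jars", ",".join(extra_jars)]
    # The application JAR comes after the options, followed by its own arguments
    cmd.append(app_jar)
    cmd += list(app_args or [])
    return cmd


# Example with made-up names: print the command instead of running it
example = build_spark_submit(
    app_jar="daily-load.jar",
    main_class="com.example.etl.DailyLoad",
    extra_jars=["libs/config.jar", "libs/jdbc-driver.jar"],
    app_args=["2024-01-01"],
)
print(shlex.join(example))
```

Printing the command first makes it easy to review on the development cluster before actually running it.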

After the code has been verified on the development cluster, approval from the responsible authorities is needed to move it to the testing (UAT) cluster.

Once UAT is complete, the code moves to the production cluster.

Important note: real data won't be available on the development and testing clusters.

Before going to production, the spark-submit jobs can be scheduled to trigger at a fixed interval. There is usually a separate team for scheduling (example scheduler: Airflow).

Create a separate shell or Python script that runs all the spark-submit jobs (there may be 50 or 100 JAR files to run) in the required environment. This makes execution easier, and the script can be shared with the scheduling team for scheduling.
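A wrapper script of this kind could look roughly like the following Python sketch. The environment names, YARN queue names, job entries, and the function `run_jobs` are all assumptions made for illustration; the script simply runs each `spark-submit` in order and stops at the first failure so that a scheduler can detect the non-zero exit code.

```python
import subprocess
from typing import Callable, Dict, List

# Hypothetical mapping from environment name to YARN queue
ENVIRONMENT_QUEUES: Dict[str, str] = {
    "dev": "dev_queue",
    "uat": "uat_queue",
    "prod": "prod_queue",
}


def run_jobs(
    jobs: List[Dict[str, str]],
    env: str,
    execute: Callable[[List[str]], int] = subprocess.call,
) -> Dict[str, int]:
    """Run each job with spark-submit; return exit codes keyed by job name."""
    if env not in ENVIRONMENT_QUEUES:
        raise ValueError(f"Unknown environment: {env}")

    exit_codes: Dict[str, int] = {}
    for job in jobs:
        cmd = [
            "spark-submit",
            "--master", "yarn",
            "--queue", ENVIRONMENT_QUEUES[env],
            "--class", job["main_class"],
            job["jar"],
        ]
        code = execute(cmd)  # subprocess.call returns the process exit code
        exit_codes[job["name"]] = code
        if code != 0:
            # Stop at the first failure so later jobs do not run on bad input
            break
    return exit_codes


# Example job list with made-up names; in practice this might be read from a file:
# jobs = [
#     {"name": "load", "main_class": "com.example.etl.Load", "jar": "load.jar"},
#     {"name": "report", "main_class": "com.example.etl.Report", "jar": "report.jar"},
# ]
# results = run_jobs(jobs, "dev")
```

Passing the executor as a parameter is a design choice for this sketch: it lets the script be dry-run or tested without a cluster, while the scheduling team can call the same function unchanged.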

To deploy to production, we need to go through the change request team. Once we get approval, we can go to production.

Now our code is live. If any issue arises, the support team provides 24x7 support for the production environment and gets back to the developer in case of a bug. The developer then fixes it, and the changes are deployed by following the same procedure.

This cycle then continues based on the requirements.

Credit - Data Engineering
